Abstract

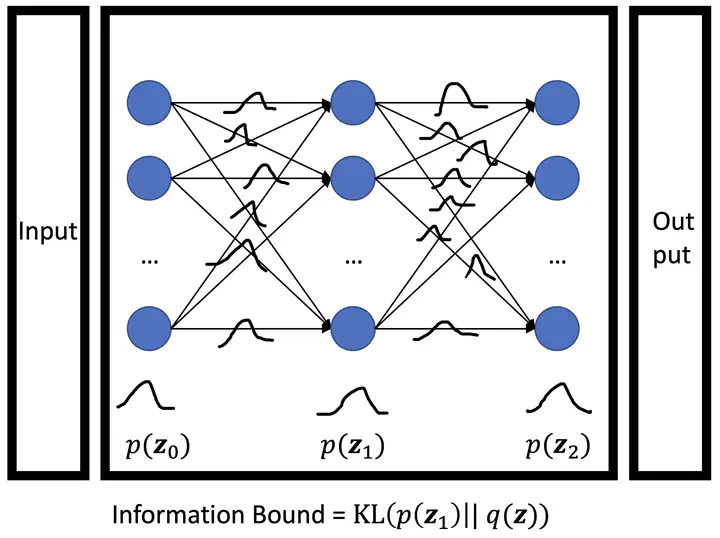

Bayesian neural networks (BNNs) have drawn extensive interest because of their distinctive probabilistic representation framework. However, despite their recent success, little work has focused on an information-theoretic understanding of BNNs. In this paper, we propose Information Bound as a metric of the amount of information in Bayesian neural networks. Unlike mutual information in deterministic neural networks, whose estimation usually requires modifying the network structure or using specific input data, Information Bound can be easily estimated on existing Bayesian neural networks without any modification of network structures or training processes. By observing the trend of Information Bound during training, we demonstrate the existence of a "critical period" in Bayesian neural networks. In addition, we show that Information Bound can be used to judge the confidence of model predictions and to detect out-of-distribution datasets. Based on these observations, we propose two regularization methods: Information Bound regularization and Information Bound variance regularization. Information Bound regularization encourages models to learn the minimum necessary information and improves model generalization and robustness. Information Bound variance regularization encourages models to learn more about complex samples with low Information Bound. Extensive experiments on KMNIST, Fashion-MNIST, CIFAR-10, and CIFAR-100 verify the effectiveness of the proposed regularization methods.